6 Testing of User Interface Design

This chapter summarizes the user interface testing performed in November 2012 as part of this thesis and of the subject User Interface Design [49]. The testing focused on user experience when working within the page view. It consisted of several phases: a screener, interviews, low fidelity testing and high fidelity testing.

6.1 Objective

The objective of this user testing was to improve the existing user interface of the page tree view, because several user experience difficulties had been detected in this area during development and use of the system. The page tree view is the most complex area of the administration and is designed for managing daily tasks such as working with pages and content. The user testing took place in winter 2012 with two real users.

The testing process had three independent phases: an initial phase with a screener and an interview, low fidelity testing with a paper prototype, and high fidelity testing with the real application. A list of ideas and improvements emerged from the testing; this list was later analysed and the changes were incorporated into the application. The tested version of the system was 2.0 for the low fidelity testing and 2.1 for the high fidelity testing. The corresponding updates were implemented in version 2.1 after the low fidelity testing and in version 2.2 after the whole testing.

6.2 Target Group

The target group for this project consists of both editors and common users. Editors are usually skilled in website administration and similar work. They typically work in a web design studio that builds websites for external clients, or in a large company that maintains many websites of its own. A common user is a person who manages only his own personal or commercial website. A user of the Urchin CMS is expected to have basic computer and internet skills, such as browsing web pages, using web forms, using e-mail, or working with a text editor. Basic knowledge of some content management system is also expected. Participants in the testing are selected using a screener and an interview.

6.3 Test Participants

6.3.1 User A

The first participant is a 24-year-old male. He works in a web design studio as an editor; his work is to code templates and manage the content of various clients' websites. The company uses its own content management system to develop web presentations and applications. In his previous job, he also used another system, the open-source Joomla.

6.3.2 User B

The second participant is a 31-year-old male who works as a teacher in a language school. User B runs his personal website, which offers translation and teaching services, and also manages his father's online portfolio. He uses the open-source WordPress [48] to run both sites and has tried another free content management system as well.

6.4 Screener

The main purpose of a screener is to classify users and to select those suitable for the interview. This screener consists of a few questions related to the project, focusing on the basic skills required for working with a content management system.

6.4.1 Questions

A. How skilled are you with a word processor (such as Word or Writer)?

- unable to use

- basic: creating and editing documents, simple formatting

- intermediate: using styles, inserting images, managing tables

- advanced: using macros and complex formatting, tracking changes

B. How skilled are you with the internet?

- unable to use

- basic: web browsing, search, e-mail use

- intermediate: shopping online, using internet banking, editing content

- advanced: coding templates, knowing HTML and CSS

- professional: server- or client-side programming

C. Do you have experience with at least one content management system?

- yes

- no

6.4.2 Selection of Participants

As discussed above, test participants are selected using a screener. To qualify, a participant must achieve the following results in the screener:

- question A (computer skill): at least basic level

- question B (internet skill): at least intermediate level

- question C (content management system knowledge): yes

6.4.3 Screener with User A

The following list shows the screener results for User A:

- computer skill: intermediate

- internet skill: advanced

- content management system knowledge: yes

6.4.4 Screener with User B

The following list shows the screener results for User B:

- computer skill: intermediate

- internet skill: intermediate

- content management system knowledge: yes
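The qualification rule from Selection of Participants amounts to an ordinal comparison per question. The following Python sketch is purely illustrative: the screener itself was a questionnaire, and the level lists and the `at_least` and `qualifies` functions are hypothetical names introduced here, not part of the Urchin system. It encodes the thresholds for questions A, B and C and applies them to the screener results of both users.

```python
# Hypothetical encoding of the screener answers as ordered scales (lowest first).
TEXT_PROCESSOR_LEVELS = ["unable to use", "basic", "intermediate", "advanced"]
INTERNET_LEVELS = ["unable to use", "basic", "intermediate", "advanced", "professional"]


def at_least(levels: list[str], answer: str, minimum: str) -> bool:
    """Return True if `answer` is ranked at or above `minimum` on the given scale."""
    return levels.index(answer) >= levels.index(minimum)


def qualifies(text_skill: str, internet_skill: str, knows_cms: bool) -> bool:
    """Apply the selection rule: A >= basic, B >= intermediate, C == yes."""
    return (
        at_least(TEXT_PROCESSOR_LEVELS, text_skill, "basic")
        and at_least(INTERNET_LEVELS, internet_skill, "intermediate")
        and knows_cms
    )


# Screener results reported for the two participants.
user_a = qualifies("intermediate", "advanced", True)
user_b = qualifies("intermediate", "intermediate", True)
print(user_a, user_b)  # True True
```

Comparing index positions keeps the check ordinal, so any answer above a threshold also satisfies it. Both participants pass, which is consistent with their selection for the interview.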

6.5 Interview

The interview is used to gather basic information about the participants before the testing begins. The acquired data are analysed and used to design scenarios for both parts of the testing.

6.5.1 Topics and Questions

The interview starts with a relaxed introduction and then covers all important topics, grouped as follows.

A. Use of internet

- How often do you connect?

- What do you search for on the internet?

- How do you get links to interesting pages?

- Do you use tabbed browsing?

- Do you bookmark pages, use a download manager or additional plug-ins?

B. Content management systems

- Where do you use a content management system?

- Which system do you use in your job or for your business?

- Have you tried other content management systems? What is your experience with them?

- Do you use help or documentation if available?

C. Managing pages

- Do you prefer using a tree view or a plain list of pages?

- Do you prefer having pages for different language versions separated?

- Do you favour complexity over simplicity or vice-versa?

D. Managing content (news, articles, texts)

- Do you use a WYSIWYG editor or prepare content outside the system?

- Do you review content before publishing it online?

- Do you like having news or articles grouped together for easier manipulation?

- What kind of feedback do you expect on an unsuccessful action?

6.5.2 Interview with User A

The following list summarizes information about the first participant, User A.

A. Use of internet

- uses internet almost every day in his job and at home

- reads mostly news, articles about web design, travelling and animals

- uses mail, social networks, chat, watches videos

- searches for links or gets them from friends via social networks

- uses 10-15 tabs at once

- bookmarks many pages, uses web design related plug-ins in his job

B. Content management systems

- currently uses CMS only in his job

- uses a proprietary CMS developed by the company he works for

- had used an old version of Joomla in his previous job

- experienced many problems with maintenance

- found the user interface and content editing awkward and buggy

- searches for help online or, at work, asks colleagues

C. Managing pages

- usually prefers a tree view, but also uses a plain list of pages

- is used to a single tree for all pages in his company's CMS

- experiences some discomfort with that, because many websites have same-named pages in multiple language versions (which leads to editing errors)

- prefers complexity, likes to have most features in the page settings

D. Managing content (news, articles, texts)

- usually prepares content outside the system in HTML

- reviews content on a development server

- this server contains an almost up-to-date copy of the live website

- likes grouping of content elements, uses this feature in his job

- expects a warning with a detailed explanation of the problem

- advanced feedback is better, even with more technical details

6.5.3 Interview with User B

The following list summarizes information about the second participant, User B.

A. Use of internet

- uses the internet about every other day

- browses language forums, buys tickets, uses web-mail and Skype

- finds pages mainly via search

- uses up to 5 tabs at once

- has a stable set of bookmarked pages that rarely changes

B. Content management systems

- uses a CMS for his own and his father's websites

- uses the open-source CMS WordPress

- installed Drupal about two years ago

- encounters problems with resource consumption on his web hosting

- does not use help, sometimes searches for a solution online

C. Managing pages

- uses only a plain list of pages

- is content with this situation, manages only a few pages

- does not have a tree view in his system, but would like to try it

- prefers simplicity over complexity, wants all actions he uses to be available in the page settings

D. Managing content (news, articles, texts)

- uses the built-in WYSIWYG editor for formatting and adding images

- reviews content just after publishing it on the live site

- likes the idea of having a page preview in the administration

- likes the idea of grouping news or articles together for simpler management

- expects a message with an explanation

- dislikes it when something fails and he is not informed about what happened

6.5.4 Summary of Information

The following requirements for the user interface design of the page tree view were derived from the interviews:

- different web presentations have separate tree views

- the tree view is the default method of managing pages

- content and page settings are managed mostly in the tree view

- content preview is provided

- user can choose between WYSIWYG editor and HTML source editing

- components are used for grouping elements and assigning them to the page

- expressive and detailed feedback is provided

6.6 User Scenarios

User scenarios are used for testing and cover operations that users perform in the content management system administration. The prerequisite for each scenario is that the user is logged in to the administration and has been briefly instructed about the basic concepts of the system. These concepts include working with presentations and pages, managing content using components and elements, and a brief introduction to user permissions. The testing environment already contains several web pages, such as a welcome page and a services page.

The scenarios cover four fundamental operations commonly performed in the page tree view. All operations are listed below, each with a detailed description. However, during the testing the participants are NOT given these detailed instructions, only a short description of each task. If the participants followed the instructions step by step, there would be nothing to test. Instead, the scenarios are designed to record user experience and find potential usability problems in the page view.

A. Add a new page for articles to the English presentation

- change presentation if necessary

- choose parent page in a tree view if adding a sub-page

- click on new page link

- fill in the form with page information

- click on insert button

- fix mistakes if validation fails and try again

B. Create a component for articles and assign it to the new page (added in scenario A).

- change presentation if necessary

- click on new component link

- select Articles module and fill in the form with component information

- click on insert button

- fix mistakes if validation fails and try again

- choose page in a tree view

- select free position and the newly created component

- click on assign button

C. Add a new article to the new component (assigned in scenario B).

- change presentation if necessary

- choose page in a tree view

- navigate to the previously assigned component

- click on new record link

- fill in the form with article information

- click on insert button

- fix mistakes if validation fails and try again

- click on preview record link to check new article online

D. Edit the existing article (created in scenario C).

- change presentation if necessary

- choose page in a tree view

- navigate to the previously assigned component and find the newly added article

- click on record editing link

- change information in the form as desired

- click on update button

- fix mistakes if validation fails and try again

- click on preview record link to check updated article online

6.7 Low Fidelity Testing

6.7.1 Testing Overview

Low fidelity testing focuses on testing with real users using a simplified prototype. Each testing session is an interview between a tester and a participant. The tester acts as a simple computer that operates the user interface for the participant. A paper mock-up is used to simulate the real application and its features. The interface of the paper prototype resembles the real application, but only the actions and elements important for the testing are provided; other parts of the user interface are omitted. Participants test the prototype without having seen the real application before.

6.7.2 Paper Prototype

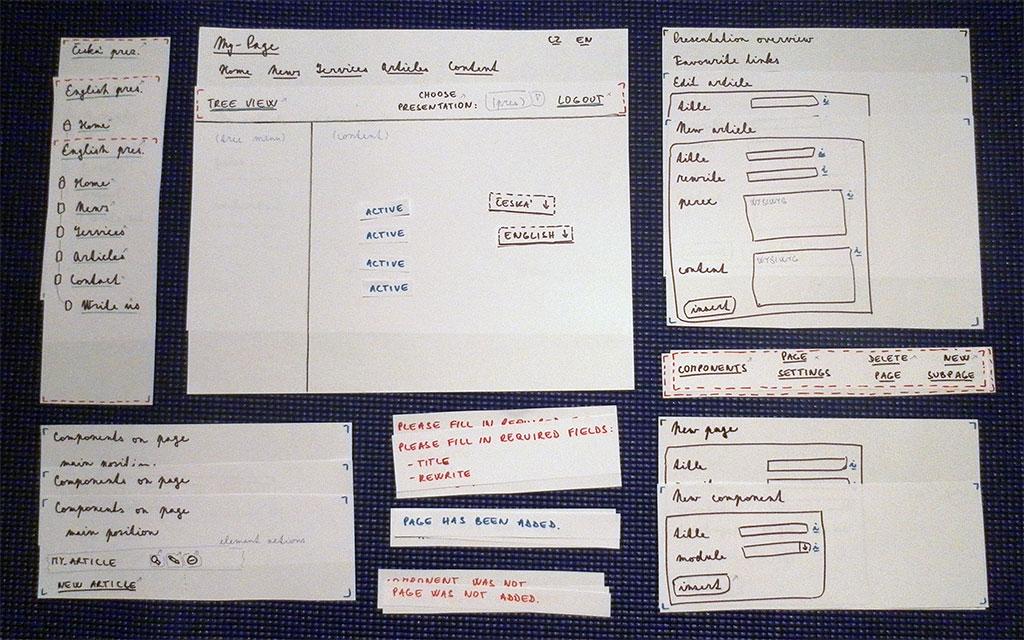

The paper mock-up serves as the low fidelity prototype for the user testing. It provides the basic views, user actions and feedback needed for the testing scenarios. It consists of several types of paper components, for example a tree view, menus, forms, page content and form feedback. Figure 6.1 displays all paper components used in this type of testing, including menus, main screens, basic user interface elements and feedback messages.

Figure 6.1: Paper mock-up overview with different types of paper components

6.7.3 Goals and Scenarios

The goal of the low fidelity testing is to find out whether the user interface is well designed and suitable for common users. The testing uses the four scenarios already described in section User Scenarios, listed again below. All scenarios are linked together: in each one, the user works with a page, a component, or an article created in a previous scenario.

- Add a new page for articles to the English presentation.

- Create a component for articles and assign it to the new page.

- Add a new article to the new component.

- Edit the existing article.

6.7.4 Testing Plan

- start with relaxed questions and a short discussion about the initial interview

- prepare the paper prototype and all tools

- tell the participant how the testing works

- ask for permission to record the session and take photos

- explain basic features of the tested application

- test all scenarios

- discuss and review user experience

6.7.5 Testing with User B

The first participant in the low fidelity testing was User B. Before the testing, a meal was served and the session began with a relaxed discussion about the testing and the initial interview. User B was a bit tired and curious about what to expect, so he was briefly told about the testing: approximately how long it takes, what is being tested, how it works and what the output is. Immediately afterwards he was told about the tested application, as it differs from the content management systems he had used before. With the help of some real-world examples, User B had no major problems understanding how the system works. All basic concepts, such as pages, components and elements, were discussed.

After this introduction, the paper mock-up was shown to the user and described in detail, and the roles of tester and user were explained. The user agreed to photos and an audio recording of the session. Due to the poor quality of the recording, only the camera was used during the session to take a few photos. He was then asked to think aloud during the testing and to ask for an explanation or help if necessary. The scenarios were tested without showing their detailed descriptions to the user; instead, he was shown the scenarios and briefly told what to do.

The first scenario was adding a new empty page to the English presentation. Before starting, User B was curious about all links and features available in the prototype, so they were briefly explained to him. Selecting the correct presentation went without any problem, as did adding a new page using the add page form. The user read the help next to the form inputs, more out of curiosity than because he did not know what to do. Form validation was not triggered, as the provided input did not contain any errors. Figure 6.2 shows User B after adding a new page.

Figure 6.2: User B added a new page for articles

The second task was to add a component to the newly created page. The user had some problems finding the new component link. After a while he found it in the presentation menu, although he had expected it in the page menu, i.e. in the same place where he can select an existing component. As with adding a page, the user had no problems filling in the form and adding the new component once he had found the link. After adding the new component, he had to assign it to the page. The third scenario was to add a new article to the newly added page and component; this time, the operation was completely successful. For the last scenario, editing the new article, the user was asked to work without any instructions at all. His only mistake was that he forgot to use the preview button to check the changes in the article online.

After the scenarios, the whole testing process was briefly discussed. The user felt comfortable with the application layout, the tree view and the forms, including their help and validation. He thought that component handling could be implemented better. He was also very curious about other features and functions he had seen in the prototype, so several of them were briefly described to User B after the testing was finished. Besides this output, several tips and notes for the next session were taken. The whole session took about 80 minutes, not counting the dinner and discussion of other topics.

6.7.6 Testing with User A

Low fidelity testing with User A took place a few days after the first testing. User A works as an editor, so things went slightly better than with User B. This session took place at the same location as the previous testing with User B. Again, the user was first asked about the initial interview. Then he was instructed how the testing and the paper prototype work. He already knew what user testing involves, so this part went very quickly, as did the introduction of the system and its features. User A was also asked for permission to take photos and was asked to think aloud and to ask questions if necessary.

This time, a slightly different approach to testing the scenarios was chosen. User A is a more experienced user of content management systems, so there was room for experimenting. He was given only basic instructions for each scenario, without any further hints. This approach worked very well, as described below. All scenarios were exactly the same as in the previous testing.

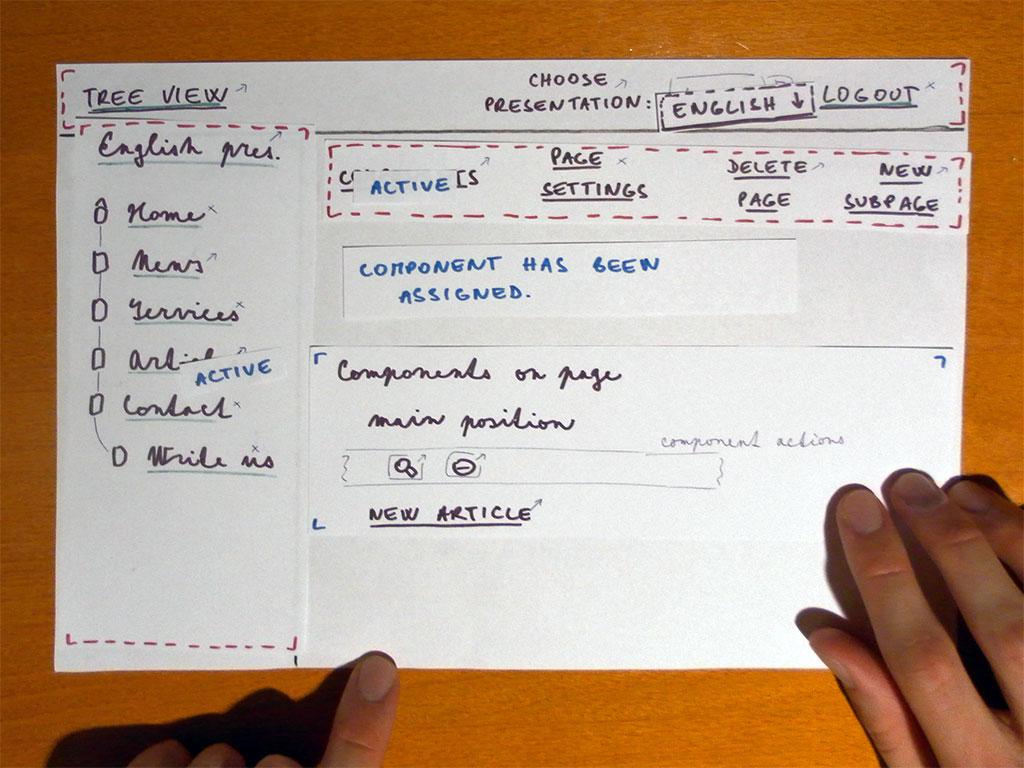

During the first scenario, User A did not encounter any major problems with the user interface. His interaction with the page tree view, as well as with the menus and forms, was clear and straightforward. The only difference was that he added the page under another page instead of directly to the presentation; this was his intention, not a consequence of flawed interface design. The second task was again adding a new component. Unlike User B, User A had no difficulty finding the new component button. Even so, he suggested that it would be great to add and assign a component together in one action, and that this action should be in the page menu, not the presentation menu. Figure 6.3 shows User A after adding a new component.

Figure 6.3: User A after adding a new component

The last two scenarios, adding and editing an article, went very well without any problems. During the testing, the user was very pleased with the forms and action feedback, but a little concerned about the management of components. Other features were also discussed in detail, such as the layout of the user interface, menus and links. In the participant's view, these follow common standards and work without problems.

6.7.7 Testing Summary

These features of the user interface worked very well:

- global layout of the administration interface and menus

- main concept of presentations, pages, components and elements

- page tree view as an effective tool for page management

- brief and clear names of links and actions

- forms, their validation and help next to inputs

- straightforward and clear feedback messages

- preview feature where user can check edited content online

These features could be improved:

- adding a page to the first level versus a lower level could be better distinguished

- a hint about the preview would be useful so that the user does not forget to use it

These features did not work very well:

- adding new component only from the presentation menu is a bad option

- assigning new component after its creation is confusing

- the two actions for creating and assigning a component should be merged

Most features of the user interface worked very well. However, there were serious user experience problems with creating and assigning new components; the low fidelity testing confirmed these problems, which had already been detected before. The next part of the testing is high fidelity testing, which will be performed using the existing application (possibly with some advanced features disabled). In any case, more links, features and form options will be available, and the next phase shows how the user experience changes with the real application.

6.9 High Fidelity Testing

6.9.1 Testing Overview

High fidelity testing is a type of testing with real users that usually follows low fidelity testing. In this case, a high fidelity prototype installed on a testing machine is used. Unlike the paper prototype, this application has all features enabled, not only the functions required for the testing. The testing application uses a simple database for a small company website. Before this phase of testing, all participants had seen only the paper prototype, not the tested application itself. The tested version is 2.1, which includes many user interface updates made since the low fidelity testing.

6.9.2 Goals and Scenarios

The main goal of the high fidelity testing is again to test whether the page tree view is well designed and to gather ideas for improving its user interface. In contrast with the previous low fidelity testing, the real application is used instead of a simple paper prototype. All testing scenarios are exactly the same as in the previous phase; see section User Scenarios for details.

6.9.3 Preparations

First, meeting times for the testing were arranged. This time, the testing was planned to take place at the author's place. Then the testing application was prepared from the current version of the system: the database was purged and new content was prepared. Several copies of the testing database were made, one for the author and one for each of the two participants. After setting up the application, the author went through all scenarios to verify that everything worked correctly. A table with a laptop, a lamp, drinking water and a comfortable chair was prepared directly before the testing session. Figure 6.4 shows the testing place after setting it up.

Figure 6.4: Place prepared for the high fidelity testing

6.9.4 Testing Plan

- start with an off-topic discussion and briefly review the previous testing

- prepare the laptop with the testing environment

- explain the differences from the low fidelity testing

- repeat the basic features of the tested system

- inform the user that the real application is used (with many other features)

- test all scenarios

- discuss and review user experience

6.9.5 Testing with User B

The first testing session, with User B, took place at the author's place. Before the testing, everything was prepared as described in section Preparations. The first step of this session was a short discussion about the differences from the previous low fidelity testing. User B was told that all scenarios remained the same as before and that the real application would be used for the testing. The fact that the real application has many more features than a simple paper prototype was also mentioned. Then a few instructions about the testing laptop and environment were given. He was also asked whether he wanted to use a mouse or a touch pad for interaction with the tested application. User B briefly tried the touch pad and then chose it.

As in the low fidelity testing, User B was given short instructions for each scenario. Since he already knew the paper prototype and the system philosophy, everything went much faster and without significant problems. Several ideas emerged from the short discussion after testing all scenarios. A minor user interface problem occurred: User B had some difficulty distinguishing the preview icon from the detail icon when working with his article in the last two scenarios.

The preview icon is a magnifying glass, while the element detail icon is an eye. During the testing, he also pointed out that the presentation overview was empty and that some information should be displayed there instead of empty space. This is correct; the reason is that the testing application had no favourite links or pending elements that would be displayed in this place. User B was really pleased after creating a new component, because the user interface for this action had improved significantly. The large number of interactive elements (icons, links) in the page detail posed no problem for the user. This session took around 55 minutes.

6.9.6 Testing with User A

The second testing session, with User A, was arranged two days after the testing with User B. Everything was carefully prepared before the session started. User A is a more advanced user of content management systems, so the discussion before giving him the testing scenarios was quite short. User A decided to go directly to the scenarios. He also preferred a mouse to the touch pad.

User A was given the same instructions for all scenarios as User B. He went through the testing smoothly and, in addition, tried some other features of the system beyond the given instructions. After the testing, various topics connected to the user interface were discussed. User A really liked the global layout of the administration, the flexibility of the page tree and the various tool tips and feedback messages throughout the administration. He noted that adding a new component is slightly better than before: the operation now takes only one step instead of three. He suggested a few other ideas: more descriptive tool tips next to form fields, hiding other positions when editing a record (e.g. an article), and disabling adding a component to a redirected page, as such a component would never be displayed in the front-end. User A also briefly tested the workflow system when working with articles. This session took around 45 minutes.

6.9.7 Evaluation of Testing

These features of the user interface worked very well:

- main concept of presentations, pages, components and elements

- layout of the user interface, menus and page tree view

- brief and clear links, buttons and icons

- forms, descriptive validation and feedback messages

- creating and assigning a new component, compared with the previous state

- helpful tool-tips both in the page tree and in forms

Some ideas and possible improvements:

- better icons for detail and online preview

- hide parts of the interface that are not necessary for the current action

- more descriptive tool-tips next to form fields

The user experience in the high fidelity testing was slightly better than in the previous low fidelity testing. All tested features of the user interface worked well, although some details were to be improved in version 2.2 of the Urchin system.
